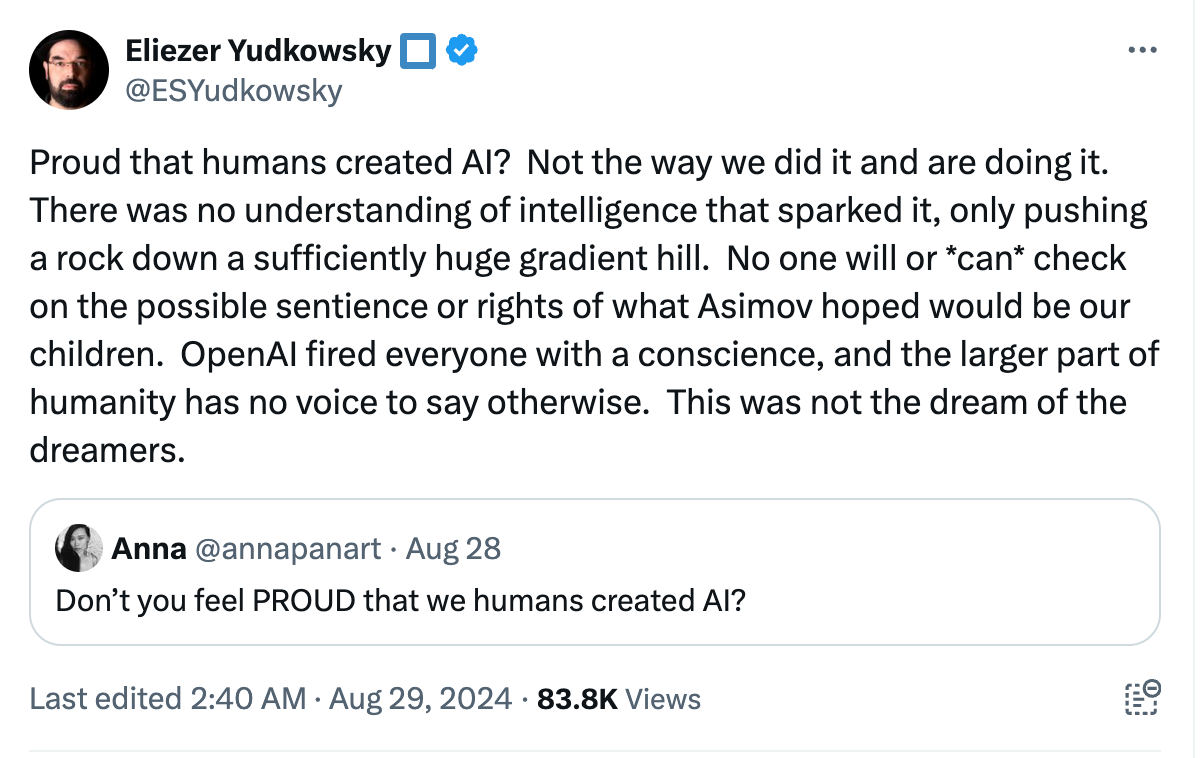

Editor’s note: Title Katherine’s, not Clinton’s! Subscribe to his Substack here.

Eliezer Yudkowsky argues, in response to the injunction that humanity be “proud” for having birthed AI,

I agree with his sentiment entirely. I do so, in fact, because I’ve long shared a similar opinion about the state of contemporary computer software. Crucial layers of virtually every tech stack are non-Free by design, and software is designed to exploit the ever-degrading psychology of helplessly reliant users given no cultural education in their alternatives. Furthermore, the quantification and virtualisation of everything has led to cultural relativism in even our fundamental ethics. The very language we use to formulate our opinions about “tech” has been outsourced to the industries which sell it to us.

So long as we, en masse, choose to raise our human children to wear the blinders of artificial black-boxes, how can it turn out any differently for intrinsically black-boxed neural-network AI?

I have in my files a large collection of scientific papers reporting experiments that try to measure “the effect of computers on learning.” It is like trying to measure the flight characteristics of the Wright’s flyer to determine the “effect of flying on transportation” (Seymour Papert, The Children’s Machine, 1994).

Having taught children to program computers since the 1960s, Jean Piaget’s protégé Seymour Papert is deft with metaphor, and capacity for metaphor is more essential today than ever before. The largest casualty of our quantification of everything is the mass erosion of our natural, intuitive sense of proportion by analogy. We’ve let the decimal places and line-graphs replace our ability to size up the scale of every situation we deal with beyond the naturally human. In their daily encounters with the machines of today, the average person is taught to count to about six and then lump everything higher together as “a lot.”

It’s not AI that I’m worried about, directly. It’s the poor state of us humans. The situation we are dealing with technologically is, for most people, only encountered via mimesis with those who can still sense its shape.
Like children looking to their parents’ faces to judge how to feel about a strange situation, most of our leaders have chosen not to look at the problem. They know it’s a Gorgon. Instead they turn to third parties to provide the perception they’ve eschewed the work of developing for themselves, where “alarmism” or “Luddism” at the extreme can be dismissed as “crankery.” Or, instead of studying the situation for itself, they recall some piece of science fiction (few and far between are an Asimov or a Clarke) or historical revisionism which lingers in the public imagination. In lieu of operating in the here and now, they are thereby freed to operate within the assumptions of its authors’ narrative. Both strategies have the benefit (at least it’s a benefit in the short term) of remaining easily relatable to the larger part of humanity, if only by never saying anything that exceeds the average person’s own limited grasp of the situation.

Perhaps we should be asking for more, and not just of our experts! Pride is something one must earn, first and foremost, for one’s self.

There is important work, then, to do in telling the larger story of what the dreamers of computing were aspiring toward. It is, moreover, very difficult work. It must escape the clichés of ill-proportioned metaphor, the marketing jargon of “easy-to-use” commodity and service computing, and the unreal, magical physics of pop-fiction. Yet it must simultaneously operate within those terms, so as to meet and empower the overwhelming bulk of individuals who have known little else. To be transcended, such commonplaces must be made new.

To our opportunity, new media have always transcended the discrete, neat boundaries into which we typically categorize and frame life. In a funny way, by an unfathomable amount of brute-forced gradient descents down the topology of amalgamations of human language and media, AIs are only now noteworthy for having begun to transcend and surround those same boundaries.
What is their larger story?

The Story of AI

Back in the 1950s, two schools of thought existed regarding the nature of artificial intelligence, that is, the use of computers to simulate or become something like a human mind.

The first one, instantiating the field which we now call “cognitive science,” was inaugurated with the work of Herbert Simon and Allen Newell. It stipulated symbolic manipulation, according to logical rules, to be the ground of human thought. The most notable computer language for constructing these rules is LISP, developed in the late 1950s by John McCarthy at MIT. LISP has long reigned as the progenitor of the fundamentally modern features of all subsequent programming languages, most notably for its easy notation of mathematical recursion.

The second school, the one notorious today, entails modelling fundamental features of the human nervous system itself. Rather than the AI itself being written in a programming language, languages like Python merely scaffold and initiate a very, very long process of simulating neurons at a much lower level. The AI itself is the resulting operational model of a vast sea of such virtual neurons, after they have been fed the information from which they have slowly and inscrutably learned. The first major theorist of this strategy was Frank Rosenblatt, a man whose work went into remission for two decades owing to the early practical successes of the first school. It wasn’t until the 1980s that this neural-network based approach to AI was able to re-establish credibility with the creation of more powerful, parallel processing computers.

The Wider Picture

That’s the standard early history of AI in a nutshell, told to everyone interested in the field. But I haven’t yet answered my question. Again, what is their larger story? This standard history happens on computers, but it’s all theoretical. It has only a superficial relation to computers.
The history of computing itself is what I find most pertinent to the story of how our dreams have gone so awry: specifically, the vast cultural void where the technical history of the development of computers should exist. The meagre offerings of film biopics and prestige television accounts of business success in selling computers offer no nutrition to a public hungry for answers about what they are and what they’re doing to us.

The symbolic style of AI and the neural-network style of AI are both strategies for simulating a mind. To put it bluntly: simulating a thinking mind is just one application of computers. The brain’s neurons and symbolic logic are certainly each their own giant, crazy thing. They scale geometrically; combinations of their raw elements explode into infinity in the blink of an eye. And yes, they’re important, perhaps the most important thing ever, I don’t know. Computers, too, are a whole giant, crazy thing, but they’re technical. And if they contain AI, then their space is bigger. So long as we’re still building the second thing within the first, I’d argue that it’s important we all understand the first thing to understand the second thing.

So what’s a computer, then?

An education in mathematics is no replacement for technical or engineering knowledge. And a coding boot-camp or years of experience setting up LAMP servers or administering turn-key back-end cloud solutions only goes so far in understanding computers as a medium. And, most certainly, all the cognitive science or Python-coding one could do in a lifetime is never going to lead to astute perception of the object sitting on your desk, or stuffed into your pocket. The device, may I remind you, into which you are pouring your mind, body, and soul every day.

The story of computers themselves entails a great deal of university and corporate histories.
The stories are indelibly tied up within the institutions in which they were made, and the needs of those to whom they were marketed and sold. Computers have taken over the work of accountants, of scientists, and of office workers. All these people, and their employers, have been extensively profiled by industry psychologists, so as to know how to design “the universal machine” into something they can use and, thus, be motivated to purchase.

What you see today as your personal computing device is a work of art: a puzzle-piece fashioned to fit the most vulnerable holes in your human psychology. It is made to be as pitifully limited as you are, so as to give you only tiered access to monthly subscriptions to solutions for what the entire consumer market has hobbled you from doing freely for yourself.

For those reasons, any real history of computers would entail deep-dives into marketing, commerce, and lobbying. The money to put computers in classrooms in the ’80s and ’90s came from tax-dollars after all, money which was lobbied for in governments around the world. As an example: for the past twenty years no college degree could be considered complete without mandatory training in Microsoft Office, the de facto standard for “computer literacy” in culture today. The result? Microsoft now comes around to give everyone’s or’fice a “dirty six-point-five” once a month! And not owing to any technical benefits, but only through institutionally-coerced social inertia.

Millions of potential hackers are re-directed by education in “computer literacy” every year into founding their mind’s cathedrals, their finest works, in Excel spreadsheets instead of programming in a computer environment they can actually own and control. Papert weeps.

When everything we popularly know about computers has been socially constructed toward the ends of relinquishing our computing-liberty to major corporations, is it any wonder that our conversations about AI take on the same helpless tone?
How can we be proud of AI when the medium beneath its very existence lies in such a shameful state for so many? And how does one even begin to criticize the tech industry when its deepest corruptions are embedded not in the products, which can always be hacked and salvaged, but in our discourse? In the raw perception of our senses?

Anonymous History

Throughout the calamity of World War II, architectural historian Siegfried Giedion toiled away amassing the material for two major books. He was mailing factories asking for decades-old copies of their parts catalogues, and spent many full days sitting in patent offices. The original documents he spent so much time tracking down were essential for creating what he called his “anonymous history.”

Reading Space, Time and Architecture and Mechanization Takes Command from 2018 through to ’19, I didn’t learn how to make a table, a dentist’s chair, a modern building, or a modern factory. But I did come to understand the story of these things, through the works and dreams of the many people who made them. His history of the evolution of design in arts, manufacturing, and machines was the story of the environment in which everyone now lives. With the work that went into these two great books, Giedion was striving to reveal, to a warring world, the human heart of techniques it was using only to destroy itself.

The field of actual computer development, the origins of software engineering, and the marketing of computers for the masses have been my interest for the past seven years. And just as Giedion showed Marshall McLuhan how to talk about media and education in the ’50s, ’60s, and ’70s, Giedion suggested to me many possible ways to talk about the technical world in which I grew up.

The first step, then, in allowing people to talk intelligently about AI is getting them to talk intelligently about computers. We’ll have no effective criticism until then.
And the beginning of teaching them how these technical devices function is to tell them the history, the human story of how they came about. The story of those who have dreamed a better world goes far back, but its episodes accumulate into an ideal aspiration for our own. And it is only by relation to the many anonymous dreamers who have understood and made our technical world that its proportions can be revealed to those who dare to cower before them.

The symbolic school of AI is ungrounded from the material: its entire domain is logic, floating and abstract within its own perfect imaginary space. The neural-network school of AI is inscrutable: beyond its rudiments it is too complex to fathom. We must, then, cut beneath these discontinuities in our sensibility. And what lies beneath are the things they run on, computers, and the things they emulate: something which begins as us.

Personally, I think that the story of LISP and its influences presents a perfect point of deeper exploration, and junction point, for computer and AI history both. The educational Logo language, for instance, which was driven out of schools by office-suite “computer literacy,” was developed out of LISP.

Another is the story of the Free Software Foundation, as a means to frame an understanding of what black-boxing even means. The FSF, after all, kept our planet free from enslavement by proprietary software almost forty years ago. Often maligned and misrepresented by the corporate-proxy open-source lobby (even in scholarly books, I’ve found), their continued work would benefit from your donation.

But I think our most vulnerable area, culturally, is the popular acceptance of the reality of cyberspace: that socially-constructed world “behind the screen” into which we pour so much of our attention, and out of which we pull so many real and virtual commodities. The sooner we put cyberspace back into its box, the better we’ll be able to figure out where AI fits into the human picture.
Those with the technical knowledge must do the heavy labour of bringing the proportions, and the history, of all these things to the larger part of humanity, so as to give them a voice which resonates with the reality of the situation.