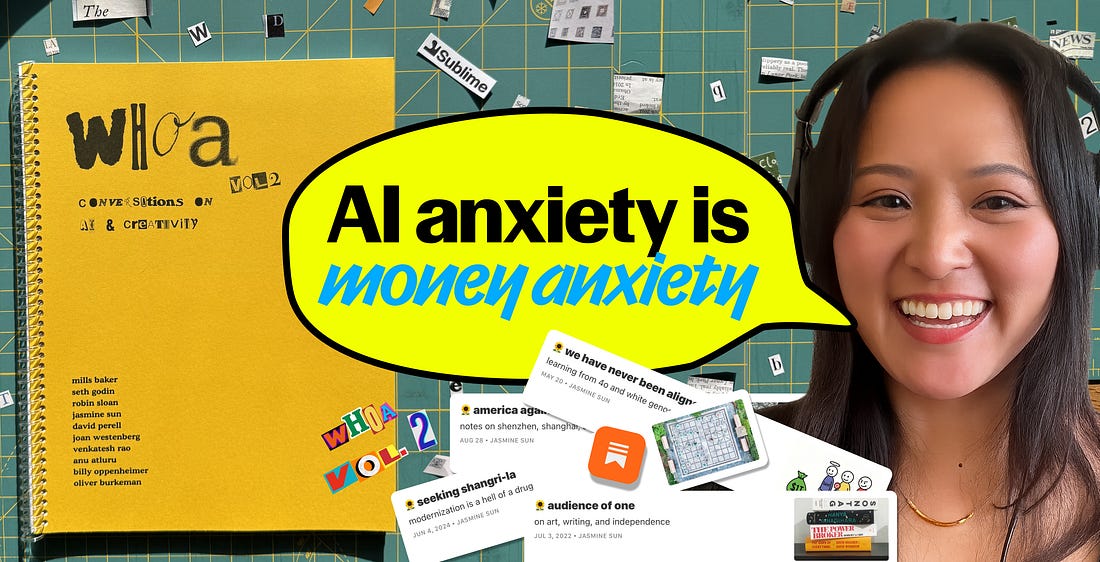

This is the Sublime newsletter, where we share an eclectic assortment of ideas curated in and around the Sublime universe.

In case you missed it… we released our annual zine: Whoa, Vol. 2: Conversations on AI and Creativity. We’ll be releasing one conversation here each week. If you’d rather savor instead of scroll + want early access to all ten conversations, including David Perell, Oliver Burkeman, Venkatesh Rao, and Anu Atluru, grab the physical + digital zine. And if you already ordered your copy, the next batch will be shipped this weekend.

This series is made possible by Mercury: business banking that more than 200k entrepreneurs use and hands down my favorite tool for running Sublime.

Companies are only hiring AIs. You need VC money to scale. This economy is bad for business.

If you’ve fallen for any of these oft-repeated assumptions, you need to read Mercury’s data report, The New Economics of Starting Up. They surveyed 1,500 leaders of early-stage companies across topics including funding, AI adoption, hiring, and more.

- 79% of companies who’ve adopted AI said they’re hiring more, not less.
- Business loans and revenue-based financing were the top outside funding sources for accessing capital.
- 87% of founders are more optimistic about their financial future than they were last year.

To uncover everything they learned, read the full report.

Mercury is a financial technology company, not a bank. Banking services provided through Choice Financial Group, Column N.A., and Evolve Bank & Trust; Members FDIC.

A conversation with Jasmine Sun

Jasmine is better than anyone else I know at writing about overtired, zeitgeist-y tech things (pssst: agency, taste) in ways that don’t make me roll my eyes. If you’re new to her work, I would start with her statement of purpose (such delicious clarity), her essay reflecting on her four years at Substack (just good!), and her recent essay “Are You High Agency or an NPC?” which captures Silicon Valley’s mood right now better than anything else you will read. In this conversation, we cover:

Or listen on Spotify and Apple (best if you want to highlight your fave moments with Podcast Magic).

The edited transcript

Alex Dobrenko: You were working at Substack, and you left to pursue writing. Did AI factor into that decision?

Jasmine Sun: I was at Substack for four years. I left last November. If you had asked me then, I think I would have said, “No, this is totally unrelated.” But once I thought about it more, I do think that AI factored into my decision, but I think it was the other way around. Some people might expect that because AI can generate text really well, you might be less inclined to be a writer. But I think for me, it was actually the opposite. I felt an increasing amount of urgency to quit my product job and write about technology because I had been following AI for a long time. The pace of how fast things were moving and the opportunities I saw for more people to be writing about AI in a humanistic and sociologically informed way made me much more excited about writing. I didn’t have enough time and energy with my job to follow AI as closely as I wanted to.

AD: There’s so much energy around all this stuff. It feels so alive, both positively and negatively. Where do you fall? Are you an optimist or a pessimist?

JS: I don’t think I feel optimistic or pessimistic about the tools themselves. My optimism and pessimism aren’t about technologies; they tend to be reserved for humans. I feel particularly optimistic or pessimistic about humanity broadly, or about particular humans’ or particular organizations’ ability to wield tools toward whatever purposes. I tend to be curious, and I like things that are interesting. I think AI is really interesting, so I’m having fun thinking about it, using it, and studying it. That doesn’t mean I think it’s going to have mostly good effects, but there’s energy, there’s dynamism, there are so many age-old philosophical questions: “What is creativity?
What makes a human?” It’s so fun to have a new thing show up that allows us to rehash and reframe these conversations in productive ways. I tend to be a long-run optimist about humans’ ability to direct technologies toward our own flourishing. I think it will be hard, and it will require a lot of stumbling. Some bad stuff is going to happen for sure. I’m pretty worried about some of the short-term psychological and economic impacts, but I generally believe that we’re going to get through it.

AD: I want to talk about your creative process specifically. You’re writing a book with NYT journalist Kevin Roose. How are you using AI for that? What do you find it to be great at, and what do you find it not to be so great at?

JS: I use AI to some extent in most parts of my process. Very few parts are fully automated, but it assists me with many. Mostly with background research. By background research, I mean I’m trying to build a basis in a relatively new topic, or I’m going to interview someone and I need to understand their research papers. I will use AI alongside the paper itself, because I don’t have the technical background to read it on my own. AI is a super useful tool in helping me grok it better.

I also use AI in last-mile revision. I might have a draft of an essay or the ideas, but maybe I’m trying to find a word on the tip of my tongue, or I feel like I’m relying on the same sentence structure too much. It’s really helpful to just talk to an LLM and be like, “Give me ten ways to rephrase this sentence.” That usually gets the juices flowing.

I don’t use it as much for idea generation. I don’t think AI is very good at coming up with ideas yet. And I don’t use it very much for drafting prose. In terms of writing style, it tends to come out pretty canned most of the time. There are ways to make it better, but overall, I am using AI mostly for research and last-mile revision, not for drafting and not for ideas.

AD: Do you fear at all that it will become as good as you at writing like you?

JS: I’m very curious to see when it will happen. I try to make it do this all the time. I have a Claude project and a ChatGPT project where I uploaded 15 examples of my writing from a ton of different things. Sometimes what I’ll do, maybe about once every two months, or whenever a new model comes out, is give it a bullet point outline of something I’m about to write. And then I’ll be like, “Write this like me.”

The interesting thing about that is, does it sound perfectly like me? No. Would I consider it an extremely good piece of writing? No. But it does pass the plagiarism detectors. It will generate a piece of writing that sounds sufficiently like my style such that none of the AI detectors will know that it’s AI. I could use it as a first draft and feel pretty comfortable working from there. Or if it was a kind of low-stakes thing, like a grant application or some crappy email or whatever, I would be okay with it.

I wouldn’t use it for my real writing, but I’m not that scared of it. I just believe I have other advantages as a human. So if the AI makes it so it can do that too, then I will lean on other things or I will just change up my style, right? Part of being creative is evolving your style all the time, from piece to piece, from album to album, whatever. So, okay, I’ve got to evolve faster. That’s fun.

AD: You’re spot on in that it’s a very short-sighted fear. Because the next step after that, if that is true, is, “Okay, we’ll just do something else.” But it is a big fear.

JS: Yeah. One interesting example is the AlphaGo moment. In 2016, DeepMind built AlphaGo, an AI that played Go. At the time, Go was considered the most intuitive, most creative, most human board game to exist. It ended up beating Lee Sedol, who is considered the best player of the decade. At first, it made a move that was considered incredibly creative, incredibly unusual. Everyone was shell-shocked.
But then what happened next is Lee ended up coming up with moves that he personally would never have come up with. He later admitted that AI helped him step up his game because it challenged him to be more creative. It played like no human had ever played, and that forced him to think in new ways he hadn’t done before. And he never lost a game after that to any human.

I think we’ll increasingly be in a world where, yes, humans are benchmarking AI’s abilities and trying to evaluate them and coach them. But I think equally, AIs will be teaching us and benchmarking us and forcing us to push our frontiers forward. AI teaches me stuff all the time. I’m generally pretty excited about this.

AD: I had this one line that I put in a newsletter that was AI-generated. And then somebody emailed me and was like, “This line was incredible.” And I felt so messed up about it. So I wrote a confessional. And when I posted that, a lot of people commented and were like, “Dude, I’m not going to read your writing if you use AI.”

JS: My God. This is why I started over-aggressively telling people that I was using AI pretty early. For what it’s worth, I’m not even sure that I’ve ever copied a whole sentence. I mostly use AI for research or to help me pick one word. But I’m not even against copying whole sentences. I think people are really ideological and tribal about it, which is why I just pretty clearly state that I’m mostly pro-AI, or I think AI has a place. And if that’s not your thing, go read somebody else’s work.

AD: There is a purity test thing to it.

JS: Yeah, I was talking to a friend of mine who’s a film director, and in film, there are very similar anxieties as in writing, where people are very vocally anti-AI.
But he’s like, “I’ll watch a film and, because I’m a director too, I’ll know where they’ve used AI for small things, to adjust a little thing or put a building in instead of CGI.” Then he says he’ll see those same directors go up on stages at film festivals and talk about how evil AI is.

So I think we’re in this crazy phase where way more creatives are using AI than they admit, which is why I actually think it’s more important to talk about it. I think the first people to talk about it will get so much pushback from other creatives, because it feels like a betrayal. But everyone’s using this stuff. So I’d rather be transparent about it and about how I’m using it.

AD: Totally.

JS: I mean, let’s take the sentence thing. You chose the line. You put it in context, right? This is why people want to feel like there is a human force that was motivated to write about this topic, that had a personal reason they did, that put effort into putting the things together and curating it. You are still that person who’s put in this effort. That’s what people are connecting to when they read the line. They’re not necessarily connecting to the same arrangement of words if they came from a totally different context.

That’s where I tend to think that the maker behind creative works has always been an incredibly important part of the story, more so than people understand, but it will become even more important because people want to feel that there’s a personal stake there. Most writers are borrowing and remixing other people’s ideas all the time.
I cite my sources when I remember, but I’m sure sometimes I don’t cite my sources because I forgot and I thought it was my own idea. But the point is, I curated it, I rearranged it, and that was my work. You’re probably putting in enough effort that there is a real you there. If you had churned out your whole post in a minute because you copied a full essay into a post, people would feel like there’s no you in there.

It’s kind of like the Ted Chiang essay he wrote where he’s like, “It’s all about choices.” The problem with LLMs is that they make your choices for you. But the reason that most people aren’t mad if you use an LLM as a thesaurus is you’re still making the choice. That’s one of thousands of choices you’re making in that essay. So I think people just want to feel like you, a human, made some choices.

AD: Yeah, that you cared, that you put time into it. To your point about how this is going to shake up how we think about art, I think it’s going to really change how we think about ownership and ego. Not the ego of the artist, but of the person receiving the art.

JS: Yeah, I think so. I’m sure there are randomized controlled trials of this. Have you ever seen those YouTube videos where someone serves instant ramen at a restaurant, but in a proper, fancy Japanese-style restaurant setting? And then they trick all these restaurant-goers who are like, “Oh my God, look at the noodle texture!” And it literally came from a packet of Shin Ramyun, because it’s the illusion of there being effort that makes people enjoy the experience more and rate it more highly. But then they feel betrayed that it was instant ramen the whole time.

AD: Totally, and it gets to weird ideas of disclosure, right? Because why do I have to disclose that I used AI? Do I also have to be like, “Here’s what I ate for breakfast that morning,” or “Here are the drugs that I took”?

JS: Yeah, or are you going to now cite Wikipedia? Like, “I looked up this guy on Wikipedia.”

AD: Exactly.
It gets weird fast. What do you think it looks like when we’re done with that?

JS: I think we’re in a transitional stage. I don’t think it will ever be done. I think the technologies will get better, and then we’ll get over the thing about using AI for research. Then, I personally think AI will get better at generating prose. And then we’re going to have to deal with things like, “Can you read entire books written by AI?” And, “Is that embarrassing? Do you say that you do?” I think that as the tech gets better, we’re just going to have new questions about the frontier of it while the old ones fade into the background.

It’s interesting how fast people get used to things. We passed the Turing test. People thought it would be a bigger deal that we passed the Turing test, but we passed it, and we’re just like, “Yeah, obviously a chatbot can sound like a human.”

This is actually one of the things that annoys me about people who are extremely anti-AI in the writing space: they’re like, “AI is so bad at writing. It’s so generic. It’s so low-brow.” I’m not saying AI’s prose is as good as most very good writers, but two years ago, it couldn’t even write at an eighth-grade level, and now it’s writing at an undergrad level, and you never acknowledged that it had gotten that much better. Two years ago, you were like, “It can’t even string together a sentence,” and now it can, and now you’re like, “But it’s not prose.”

AD: Well, I understand it as defensiveness about encroachment on one’s perceived humanity, right?

JS: I don’t know. Other writers are better than me, and I’m not threatened by that. There are many writers who are better than me in this world, and I feel motivated by that. I can learn from those people. I can closely read their work and try to figure out how to get better myself. And I think I have other reasons that people might want to read me.

AD: It seems like you have a “we’ll figure it out and it’s fine” attitude.

JS: It probably does.
There are different jobs where I think it matters more and matters less, right? If I were an illustrator who spent decades getting really good at making illustrations, and I was doing corporate contracts, freelance work, and now all these corporations have decided that they actually don’t really care that their images look that good, so they’re just going to use more generic ones from AI, and I lost my living, that would really suck. I do worry a lot about the economic impacts of AI. I think there will be a lot of short-term economic displacement, and I don’t think we have good policy solutions for that.

The thing that annoys me is that a lot of the creativity anxiety is actually economic anxiety. It’s “I am worried that this thing is going to take my job and I will no longer be able to make a living.” I think that is a super valid anxiety that we need to be talking about more. I just wish it wasn’t couched in all of this sort of arts elitism stuff about how it’s not actually that good and everyone who uses it is an idiot.

We can acknowledge that the technology is getting pretty good, and that’s why it is an economic threat. Because actually, the more that you say, “Oh, it’s actually garbage and can’t do anything,” the less you acknowledge that, no, it is good enough to pose a threat to people’s jobs. So that’s actually a problem that we need to collectively tackle as a society. But if you can’t even get to the point of acknowledging that the tools can be useful, we’re not going to solve the economic problem either. So I think it’s just economic anxiety diffused and projected into some weird art elitism stuff.

AD: It’s a form of denial, right? “I’m going to deny that this is happening by saying it’s not good.”

JS: Yeah, this happens in the arts all the time. The people in the mainstream institutions first looked at Substack and were like, “That’s all crappy little blogs. No one even reads that stuff.” It is valid to worry at a mainstream institution about, “Am I going to have a media job in five years? Do I have ownership over my audience?” Those are really important questions. But if the way that you actualize that anxiety is by being, “All those stupid little bloggers, they can’t even write, they don’t even have editors,” that’s just cope.

AD: Right, right, right. So what do you think people should do in those positions, in those jobs? What should people do?

JS: Personally, I’m going to try to make it work as a writer. But if I don’t, I’m just going to get a job again and I’m going to write on the side. That to me feels like being an adult. I don’t think the world is unfair to me for forcing me to create enterprise value. I can create some enterprise value, and my enterprise value will subsidize me for writing my little blog posts. I’m not sure that it is a human right to be able to earn a living from your art. I think that art is incredibly valuable, but you have to provide value in a society. I’m just a realist about this stuff.

I think the other thing I will say about jobs is that you may automate one portion of it, but an adjacent role will open up, right? Take customer support, for example. Substack uses AI as its first-line customer support. There’s this bot called Decagon. We fed it all of our customer support articles, all our past tickets, all our guides about how to grow your audience and how to write a Substack, etc. That bot is really good. If an issue is unresolved by the bot, it will escalate it to a human.

So basically, the customer support folks who used to be spending their time answering “forgot my password” tickets nonstop are now doing higher-level support for more complicated questions. Maybe they’re jumping on Zoom calls for things that you actually need to explain, just doing a lot more bespoke, higher-level stuff. That feels good to me. It seems correct that the very smart and hardworking customer support folks are not doing 10 million refund requests a day.

So maybe my recommendation would be, okay, maybe it seems like a really big jump to go be a plumber if you’re doing translation. But there are going to be some tasks within your field that AI cannot do yet. I would basically try to find out, “What are the parts of my role that AI is not quite good enough at yet?” And “Can I up-level myself to do the premium version of the AI thing, and also to become quite savvy with AI myself so that I understand where the opportunity is?” That’s probably what I’d say.

AD: What is your personal AI thesis? The core belief that drives your decisions around these tools?

JS: Okay, I have two. I feel like they have to come in tandem. So one is that I do think it’s magical, and I think that everyone should be fascinated and interested in experimenting with how to use it, how it works, what it does, what it doesn’t.
So the first is that openness and curiosity are my default orientation. But the other thing that accompanies that is that human effort matters. In a workout, if you don’t feel it burn, you’re not really working out, right? I think that cognitive work is the same way. So while I am really interested in using AI, I think there is a sense where, if this is too easy, I’m probably not thinking or learning, and I keep that in mind. I can use this, but I shouldn’t be using it all the time. Sometimes I have to feel the burn in a cognitive sense, and that balances it out.

AD: Totally. Sari and I talk a lot about this idea that you could take a helicopter up to the top of the mountain or you can hike. And if you don’t hike, you’re still up there, but it’s different.

JS: Yeah, and sometimes you can take the helicopter. You don’t have enough time to do the hike, or maybe you broke your ankle. It’s not that you are never allowed to take a helicopter, but that you’re going to get something different out of it if you take the helicopter.

AD: Right, there’s a lot of moral judgment of “you cannot take a helicopter.” And you can, but it’s going to be different. That feels better than the “Helicopters are not cool. They’re not allowed. Don’t you dare take a helicopter” vibe. Okay, let’s jump into some examples of how you’re using AI tools.

JS: One thing that I find incredibly useful is that I am very aggressive and specific with custom instructions for tasks that I repeat a lot. For example, there are certain queries that I ask ChatGPT all the time. I ask ChatGPT to define new words all the time, or summarize long reports. I ask it to rephrase a sentence all the time.
Because I do a lot of reporting, I’m interviewing a lot of people. Frequently before an interview, I will search up a person’s name and need to get their career history, all of their research papers, and some other little things about what they believe or something. So when I notice that I’m doing the same task over and over, I basically create a custom instruction that gives the response in the exact format that I want.

AD: And you put these in the settings of the AI, right?

JS: Yeah, I literally just put them in the custom instruction section of ChatGPT. I used to hate how AI summarized stuff. I thought it summarized things in really unhelpful ways because it would mush everything together and lose the context of the structure. I think that the way you structure an essay or report really matters, so the summary should retain the structure of the original report. So my custom instruction says, “Retain the structure, give a bolded sentence that summarizes the section, then put bullet points for each of the pieces of evidence.” This is a much better summary than the default summary you will get from ChatGPT.

So instead of being like, “I hate how ChatGPT summarizes things this way,” or “I hate how it delivers information that way,” I’m like, “You can just make it do it the way that you want. You’re the boss. You’re the human in charge.”

AD: That mindset shift of, “You can mold this thing,” is something I think a lot of people could benefit from right now. So you said ChatGPT is your daily driver. What else do you use and for what purposes?

JS: I use Claude for anything that is advicey/therapeutic, and I use it for prose sometimes. It is much more “emotionally intelligent.” It gives more opinionated advice, which I find really helpful. And I’ve warmed up to the therapeutic use case; I used to think it was kind of stupid, but now I actually think it’s pretty good. It’s a better writer than ChatGPT by a little bit. And then I use Gemini.
Gemini, which is Google’s, I think is the most underrated. It looks kind of scary because the UI sucks, and it has all these weird toggles and stuff, but it’s awesome because it has a long context window. You can put in an hour-long interview and transcribe it. It is the only one that will do that because none of the others will take in so much content. So, similar to my other custom instructions, I have one for editing podcast transcripts: I feed it an hour-long MP3 file, then I paste in a paragraph about how to edit it into a transcript that makes sense and is readable. It sped up my transcript editing by hours.

AD: It seems like everyone’s just making money with all this. Do you have that feeling of like, man, there’s got to be a way to make money? How would you make a bunch of money with AI right now?

JS: Haha. I am not the right person to ask. I live in San Francisco. I have friends at the labs. They’re getting paid millions of dollars a year at age 25, right? There are clearly ways. The correct answer is not to be like me. I will say that even as a writer, by being a writer about AI, I probably make somewhat more money than writing about other things because there is a high demand right now for writing about AI. So I make marginally more money this way, but frankly, I don’t know. I’m not really optimizing for that right now. If I was good at making financial decisions, I would not have quit my tech job to become a writer.

AD: Okay, so you don’t have any great ideas for me.

JS: There are some stocks you can buy? If you’re an AGI believer, you can go buy some semiconductor stocks. SK Hynix is a good one. I bought NVIDIA on the dip, because every once in a while, people freak out about AI and NVIDIA stock crashes or something. And I’ll usually just buy more. But do not take my financial advice. I don’t know what I’m doing.

AD: Okay, that’s going to be the headline: “Jasmine Sun’s financial advice about AI.” Wait, so are you an AGI believer?
You’re writing the book on it. Is that a silly question?

JS: AGI does not make sense as a milestone. Not a single person can agree what it means, which means that once someone says we’ve reached AGI, everyone in AI will disagree about whether they have reached AGI or not. And that is the one thing that makes me go, you know, this is kind of silly. But I think, “Are you AGI-pilled or whatever?” is a pretty good sociological category for a group of people who think that AI will be able to do most cognitive tasks.

There are some people who believe that AI can never do good writing or will never be creative, whereas I tend to believe current AIs are not very creative, but I am an optimist about the researchers’ and trainers’ ability to move them in that direction. It might take a while, but it’s going to happen. In that sense, that orientation of, “If we apply enough compute resources toward a problem, AI will be able to do that task,” that is the AGI belief. And it describes the category of people who think a) that will be possible and b) that will be transformative socially. And in that sense, I am an AGI believer. I believe that AI is going to get really good. I believe it’s going to really matter. But do I think it’s going to happen in two years? Like it’s going to cure all diseases in two years and also cause World War III? No, I don’t believe that.

One funny thing about living in San Francisco is that I have fast timelines for the rest of the world but slow timelines for us. In SF, I’m considered a critic and a bear and a pessimist. Even the idea that people will care that things are human-generated, a lot of these folks are like, “No, people are not going to care, especially the next generation.” Or I’m like, “I want my teachers, nurses, whatever, to have a human face. They can use AI to help them, but I want it to be a person.” A lot of these folks are like, “Actually, I don’t think that’s true.
I think that people become very comfortable having relationships, including more EQ-y relationships, with machines.”

AD: What do you wish AI could do but fear it never will?

JS: Admin stuff; it’s very crappy.

AD: Mmm. You don’t think it will?

JS: It probably will, but it’s much harder than it seems. Like, “Do my taxes, do my invoicing,” that kind of stuff.

AD: Everyone’s making AI predictions. Let’s do a human prediction. Where do you think humans will be in ten years?

JS: Still trying to figure out how not to get totally dopamine-hacked by all of the technology we’ve created. Ozempic, maybe. Maybe we’ll all be on Ozempic for everything: food, video games, etc.

AD: That’s sad. What question about AI and creativity isn’t being asked enough?

JS: Probably the economics one. I mean, it’s the forever question, but how are artists going to make money?

AD: That’s a hard one. And a great place to end.